- 16 Nov 2023

- 11 Minutes to read

What Can CAL Do For You?


Overview

CAL™ provides a way to learn how widespread and relevant a threat is by anonymously leveraging the billions of data points from the many thousands of analysts that use ThreatConnect®. In this article, we’ll cover the insights that CAL provides and then go deeper into how to use that intelligence in your day-to-day analysis, with instructions for both novice and advanced users. For information on all of the areas in ThreatConnect where you can view CAL data for Indicators, see the “Viewing CAL Data for an Indicator” section of ThreatAssess and CAL.

Before You Start

| Minimum Role(s) | Organization role of Read Only User |
|---|---|
| Prerequisites | |

What Can CAL Do for Me?

At this time, CAL provides insights in three main forms: reputation, Classifiers, and contextual fields. They can each be used to help your analysts and orchestration processes make better decisions faster. Let’s take a look at each of these insights to see how they may make your day easier.

Reputation

CAL generates its own reputation score on a 0–1000 scale, similar to the ThreatAssess algorithm. We have a writeup on how ThreatAssess and CAL play together, but the takeaway is pretty simple: CAL uses its massive data set and our analytics to help provide a baseline reputation score. There are a few things to note about CAL’s reputation analytics:

- For Indicators, we display the CAL reputation score on the Details card of the new Details screen. For Artifacts whose type maps to a ThreatConnect Indicator type, we display this score in the CAL column of the Artifacts card in a Workflow Case and in the CAL section when viewing more details about an Artifact on the Artifacts card.

- We’ve designed ThreatAssess to combine CAL’s opinion with those of your analysts and tailored processes. In other words, CAL’s reputation score is factored into an object’s ThreatAssess score. Of course, this calculation is customizable: System Administrators can configure ThreatAssess to weigh CAL’s opinion a lot, a little, or not at all.

- Reputation scoring isn’t one size fits all. There are elements of relevance and risk to your organization. Our goal with CAL’s reputation algorithm is to provide the best baseline that we can for Indicators.

- Our reputation score is based on lots of data. CAL handles the dynamic collection, curation, and aggregation of large volumes of data so that you don’t have to do it yourself. It pulls in massive whitelists to help clear the noise out of your workflow. CAL also aggregates all of the reported observations on Indicators to prioritize the threats that are active now.

- Reputation goes beyond the score you see on the Details screen for an Indicator. CAL also uses ThreatConnect’s Indicator Status system to help you maintain uninteresting IOCs for the sake of thoroughness without having them inundate you with false alarms.
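To make the idea of weighing CAL’s opinion "a lot, a little, or not at all" concrete, here is a minimal sketch of a linear blend between two scores on the 0–1000 scale. It is an illustration only: the function name, the `cal_weight` parameter, and the linear formula are assumptions, not the actual ThreatAssess calculation, which this article does not describe.

```python
def blended_score(cal_score: float, analyst_score: float, cal_weight: float) -> float:
    """Blend two 0-1000 scores (hypothetical formula, not ThreatAssess itself).

    cal_weight is the share given to CAL: 0.0 ignores CAL entirely,
    1.0 uses CAL's score alone.
    """
    if not 0.0 <= cal_weight <= 1.0:
        raise ValueError("cal_weight must be between 0 and 1")
    for score in (cal_score, analyst_score):
        if not 0 <= score <= 1000:
            raise ValueError("scores must be on the 0-1000 scale")
    return cal_weight * cal_score + (1.0 - cal_weight) * analyst_score
```

With `cal_weight=0.0` the analyst score passes through unchanged, which mirrors the "not at all" setting; values closer to 1.0 let CAL dominate.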

Using Reputation

If you’re participating in CAL, it’s already making your life easier! Still, here are a few things to consider if you want to step up your game by incorporating CAL’s reputation insights into your workflow:

Simple

If you have sufficient permissions, you can leverage CAL’s score by weighing it more heavily in the ThreatAssess configuration page, as detailed in the “Configuring ThreatAssess” section of the ThreatConnect Account Administration User Guide. For developing teams, this is extremely helpful while you start to marshal your intelligence processes: CAL can give you some kind of score to start with, and you can focus on triaging the universally critical threats before worrying about creating intelligence of your own.

Intermediate

The Indicator Status feature, located at the top right of an Indicator's Details screen, gives you a way to remove a lot of noise from your system. Again, if you’re participating in CAL, you’re already leveraging its insights on hundreds of millions of Indicators! Leave the CAL Status Lock setting turned off to let CAL set the flag on whether each Indicator should be enabled or disabled as far as piping it to your integrations. Of course, you can adapt your processes to manually set (and lock) that flag for Indicators that you know are or aren’t of interest.

Advanced

To empower our security ninjas, we expose the CAL score via Playbooks. You can build your Playbooks to bin your Indicators (or avoid creating them at all) based on CAL’s score right off the bat. This can be especially helpful when it comes to removing noise from your system or firing off alerts and triage workflows. If CAL has decided something is universally good or bad, take that step out of the equation for your analysts!
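The binning logic described above might look something like the following sketch. The cutoffs and bin names are hypothetical placeholders that you would tune for your own environment; the sketch also assumes the CAL score has already been retrieved and is passed in as a plain integer, and it does not use any Playbook API.

```python
from enum import Enum


class Bin(Enum):
    SUPPRESS = "suppress"  # treat as noise; skip creating or alerting
    REVIEW = "review"      # leave for an analyst to triage
    ALERT = "alert"        # fire an alert or triage workflow


# Hypothetical cutoffs on CAL's 0-1000 scale; adjust to taste.
LOW_CUTOFF = 200
HIGH_CUTOFF = 700


def bin_indicator(cal_score: int) -> Bin:
    """Sort an Indicator into a bin based only on its CAL score."""
    if not 0 <= cal_score <= 1000:
        raise ValueError("CAL score must be on the 0-1000 scale")
    if cal_score <= LOW_CUTOFF:
        return Bin.SUPPRESS
    if cal_score >= HIGH_CUTOFF:
        return Bin.ALERT
    return Bin.REVIEW
```

Keeping the middle band as "review" means that only the clear-cut cases are taken out of your analysts' hands.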

Threat Graph

With the release of ThreatConnect version 6.5, we’ve introduced Threat Graph. This feature provides a graph-based interface where you can pivot on Indicator, Group, Case, and Tag associations in ThreatConnect, as well as relationships for Indicators and Groups that exist within a CAL dataset.

Using Threat Graph

In Threat Graph, you can click on Indicator or Group nodes and select Pivot with CAL to explore and visualize Indicator and Group relationships, respectively, that exist in CAL.

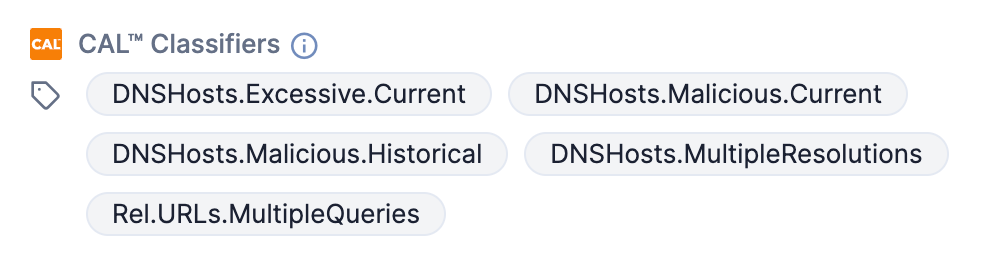

Classifiers

Our analytics apply a series of labels called Classifiers to Indicators that meet certain conditions. These labels are designed to give you a clear, concise vocabulary to understand some of the salient data points about an Indicator. The Classifiers are similar to Tags in ThreatConnect, except that they’re applied by CAL using the totality of its data set and statistical models. Figure 1 shows some Classifiers in the CAL™ Classifiers section of the Details card on the new Details screen. You may also view these Classifiers in the Classification subsection of the CAL Insights section on the Indicator’s Details drawer and on the Indicator Analytics card when viewing the Indicator’s legacy Details screen.

As we add more data collection and analytical models, we will continue to expand our vocabulary of Classifiers and fine-tune the conditions that apply them.

Using Classifiers

If you’re not sure how you’d use Classifiers in your day-to-day processes, here are some examples:

Simple

Something as simple as the Executable.Android or Executable.iOS Classifier may help you quickly identify binaries that run on platforms that are outside of your area of responsibility. If your organization doesn’t use Android or iOS devices, then you can easily move along!

Intermediate

If you stumble across a host that CAL identifies as having the IntrusionPhase.C2.Current Classifier, then you may have an active breach on your hands! These Indicators have been classified based on the findings of the ThreatConnect Analytics team, and you can head on over to the CAL Automated Threat Library (ATL) Source to learn more about the associated Threat to determine your next steps.

Advanced

CAL’s DNS monitoring system can let you know about the resolution patterns of certain hosts. If you see an IP address with the DNSHosts.Malicious.Current Classifier, then you can follow it in ThreatConnect and you’ll get notifications when additional Hosts in the system start resolving to it.
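The three examples above can be sketched as a small triage rule. The Classifier names come from this article; the action labels, the priority order, and the assumption that Classifiers arrive as a plain collection of strings are illustrative choices, not product behavior.

```python
from typing import Iterable

# Classifier names taken from the examples above.
OUT_OF_SCOPE = {"Executable.Android", "Executable.iOS"}
ESCALATE = {"IntrusionPhase.C2.Current"}
FOLLOW = {"DNSHosts.Malicious.Current"}


def triage(classifiers: Iterable[str]) -> str:
    """Return a hypothetical next step based on an Indicator's Classifiers."""
    labels = set(classifiers)
    if labels & ESCALATE:
        return "escalate"  # possible active breach; investigate now
    if labels & FOLLOW:
        return "follow"    # watch for new Hosts resolving to this IP address
    if labels & OUT_OF_SCOPE:
        return "skip"      # platform outside your area of responsibility
    return "review"        # no rule matched; leave to an analyst
```

Escalation is checked first so that a possible active breach is never hidden by a lower-priority match.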

Contextual Fields

CAL also provides a series of contextual fields surrounding an Indicator to help you decide what to do next. These fields may come from a variety of sources:

- Aggregated, anonymized data. CAL takes telemetry information from all of our participating instances and aggregates it after removing any identifying information. This allows CAL to provide global counts on key data points, such as how many observations have been reported on the Indicator or how many false positive votes it’s gotten and when.

- Enrichment data. To power its analytics, CAL has access to all sorts of data it’s collected. We want you to have this information, too! You may get certain information as appropriate, such as where a hostname is ranked in the Alexa Top 1 Million domains list or what OSINT feeds reported it and when.

Using Contextual Fields

If you’re not sure how you’d use these contextual fields in your day-to-day processes, here are some examples:

Simple

If you’re looking at an IOC that has a high score, but has a lot of false-positive votes, you may have stumbled into the twilight zone of bad intel! It happens sometimes—our feeds and partners occasionally let benign Indicators slip into our discussions. Sometimes Indicators were bad and then get their act together and get clean. CAL’s global false-positive data can help you better isolate bad Indicators that have gone good.
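As a rough sketch of that check, the snippet below flags Indicators that score high but have also collected many false-positive votes. The field names and both thresholds are hypothetical; they stand in for whatever contextual fields and cutoffs your own process uses.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune for your environment.
HIGH_SCORE = 700
MIN_FALSE_POSITIVE_VOTES = 10


@dataclass
class IndicatorContext:
    score: int                 # reputation score on the 0-1000 scale
    false_positive_votes: int  # global false-positive votes reported


def possibly_gone_good(ctx: IndicatorContext) -> bool:
    """True when a high score conflicts with many false-positive votes."""
    return (
        ctx.score >= HIGH_SCORE
        and ctx.false_positive_votes >= MIN_FALSE_POSITIVE_VOTES
    )
```

An Indicator flagged this way is a candidate for a second look rather than an automatic dismissal.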

Intermediate

If an IOC in question has a high number of global observations, then it may be active across the ThreatConnect user base. You may be able to identify the ebb and flow of adversary activity before you’re in the adversary’s sights, benefitting from the anonymized reporting of your peers. Trendline data can help you pinpoint where in time to look if you’re doing retroactive analysis as well.

Advanced

If you’re triaging phishing emails and see an unknown SMTP server, CAL may be able to tell you that it’s owned by Google™ and is part of the G Suite™ Mail Server. Understanding who owns infrastructure, specifically free or rented infrastructure, can help you quickly determine your next steps. Whether you’re picking up the phone to request a takedown from a hosting provider or simply blacklisting an IP address, these are the insights that start to make a difference at scale.

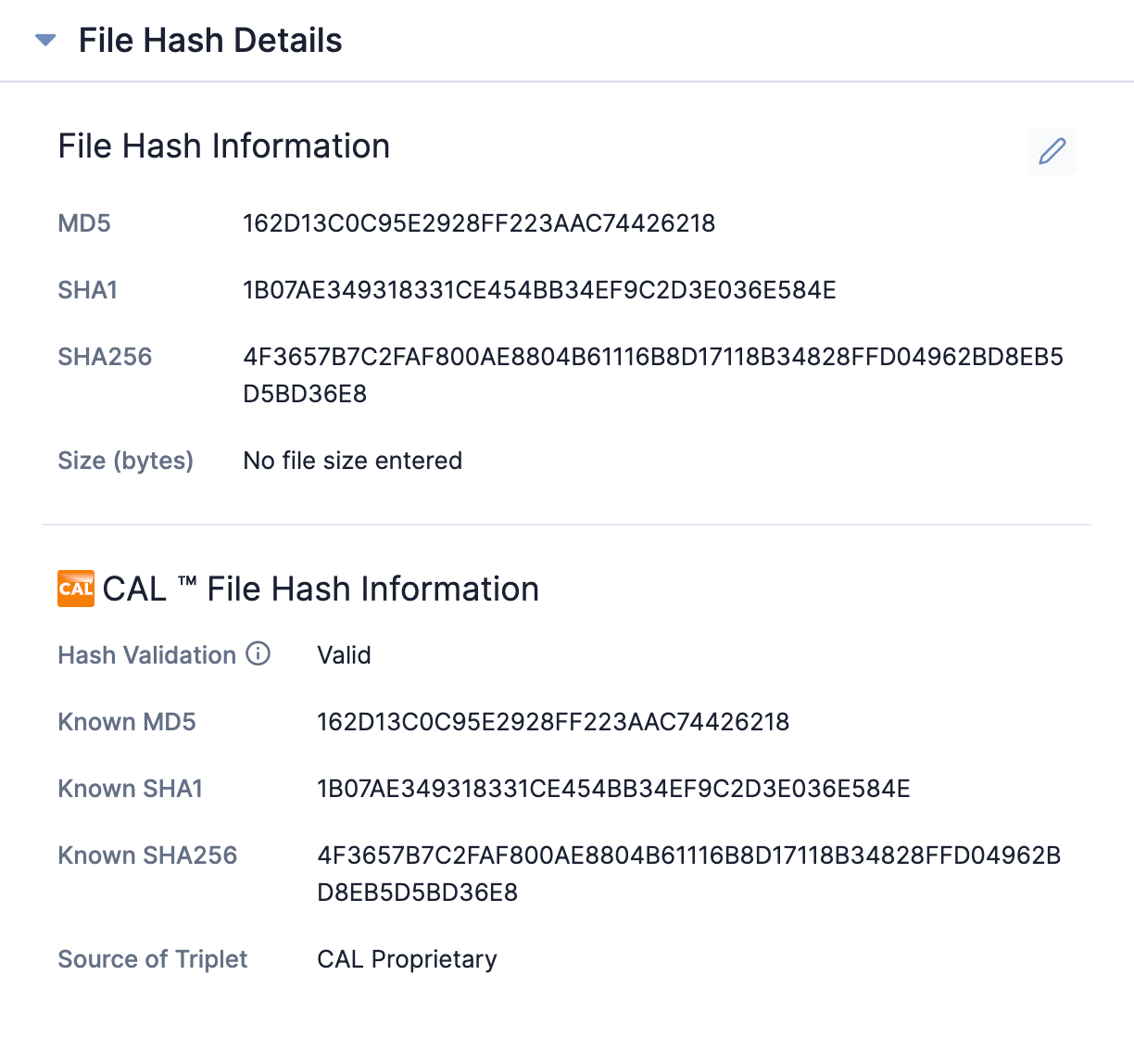

File Enrichment

File Hash Information

We can tell you firsthand that there’s a lot of incomplete and incorrect information regarding which hash goes with which. Putting aside for a moment the demonstrably-possible-but-statistically-insignificant occurrence of MD5 collisions, we were able to scour our datasets to understand which sources provide what flavors of data. We identified three “states” of data:

- Complete triplets are defined as a series of hashes (MD5, SHA1, and SHA256) that correctly belong together. Certain ground-truth sources, such as running the hashing algorithms on the file samples themselves, yield the utmost confidence in this grouping. However, we often don’t have the file samples themselves. So when we’re faced with another data source, such as an open-source feed that just spews a list of hashes, we can compute a gradient of how trustworthy those different sources are.

- Incomplete triplets occur when we get one or two of the hash types mentioned previously. Some data sources simply publish the MD5 and SHA1, for example. That’s still valuable intelligence, but it doesn’t necessarily help when you need that file’s SHA256 to conduct an enterprise-wide search-and-destroy mission.

- Invalid triplets occur when we get refuting information from a less-trusted source. If, for example, we hashed a file sample ourselves and then a feed reported a different SHA256 for the same SHA1, we would consider that to be an invalid triplet, as it refutes our more trusted source (our own hash).
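The three states can be illustrated with a short sketch. It uses Python’s standard `hashlib` to produce a ground-truth triplet from a file sample and then compares a reported triplet against it. The `Triplet` type, the function names, and the comparison rule are assumptions made for illustration; CAL’s own analytics, including its source-trust gradient, are not shown here. Hashes are assumed to be lowercase hex strings.

```python
import hashlib
from typing import NamedTuple, Optional


class Triplet(NamedTuple):
    md5: Optional[str] = None
    sha1: Optional[str] = None
    sha256: Optional[str] = None


def hash_sample(data: bytes) -> Triplet:
    """Ground truth: run all three algorithms on the sample itself."""
    return Triplet(
        hashlib.md5(data).hexdigest(),
        hashlib.sha1(data).hexdigest(),
        hashlib.sha256(data).hexdigest(),
    )


def triplet_state(reported: Triplet, trusted: Optional[Triplet] = None) -> str:
    """Classify a reported triplet as 'invalid', 'incomplete', or 'complete'.

    'invalid' means the report shares at least one hash with the trusted
    triplet but disagrees on another. Without a trusted triplet, 'complete'
    only means that all three hashes are present and unrefuted.
    """
    if trusted is not None:
        pairs = list(zip(reported, trusted))
        shares_a_hash = any(r is not None and r == t for r, t in pairs)
        conflicts = any(
            r is not None and t is not None and r != t for r, t in pairs
        )
        if shares_a_hash and conflicts:
            return "invalid"
    if any(h is None for h in reported):
        return "incomplete"
    return "complete"
```

For example, a feed that reports the correct SHA1 alongside a different SHA256 than the one computed from the sample would come back as `"invalid"`.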

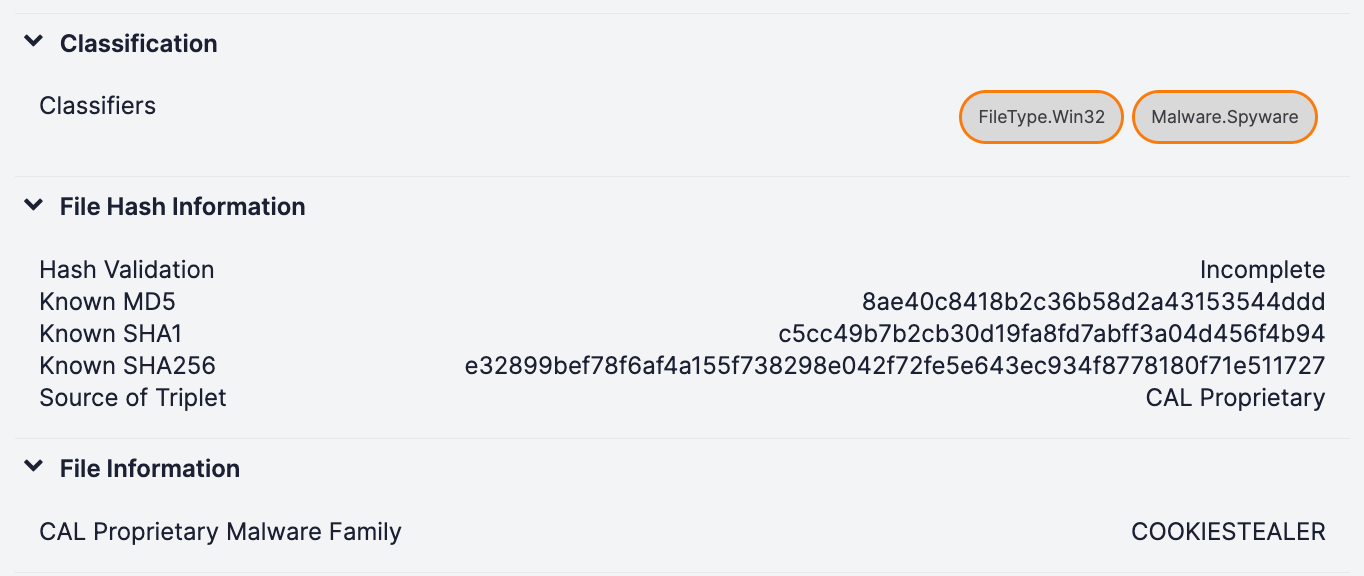

With the release of CAL 2.9, we’ve included file analytics to help identify where file hashes have been seen listed together and which sources can be trusted. You can view this data in the CAL™ File Hash Information section of the File Hash Details card on a File Indicator’s new Details screen (Figure 2) and in the File Hash Information section of the Indicator Analytics card on a File Indicator’s legacy Details screen (Figure 3).

This section outlines what our analytics have derived about a file sample’s various hashes:

- Hash Validation speaks to the state of your submitted File Indicator. For example, if you submitted just one of the three hashes in Figure 3, CAL would let you know that it is Incomplete. We know the other hashes that belong with it and would like you to have that information! Note that if you give us multiple hashes together (e.g., MD5 : SHA1 : SHA256), we’ll choose the most precise one (SHA256) and give you the information we have for that one.

- Known MD5, Known SHA1, and Known SHA256 are there to give you the appropriate hashes (if we have them) based on the “chosen” hash in your submitted Indicator.

- Source of Triplet lets you know where we got the information that led us to believe that those are the partner hashes for your submitted Indicator. This information can be from threat feeds, open sources like the NSRL, or a derivation of multiple sources and the numbers we’ve crunched around them, such as in this case (CAL Proprietary).
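Choosing the "most precise" hash from a combined submission can be sketched as follows. The sketch identifies each hash by its hex length (32, 40, or 64 characters) and prefers SHA256. Ranking SHA1 above MD5 when no SHA256 is present is an assumption, as are the function name and the ` : ` separator handling.

```python
import re
from typing import Tuple

# Hex digest lengths for each hash type.
HEX_LENGTHS = {32: "md5", 40: "sha1", 64: "sha256"}
# SHA256 first, as described above; SHA1 over MD5 is an assumption.
PRECEDENCE = ("sha256", "sha1", "md5")


def choose_hash(submitted: str) -> Tuple[str, str]:
    """Pick the preferred hash from a string like 'MD5 : SHA1 : SHA256'."""
    found = {}
    for part in submitted.split(":"):
        value = part.strip().lower()
        kind = HEX_LENGTHS.get(len(value))
        if kind and re.fullmatch(r"[0-9a-f]+", value):
            found[kind] = value
    for kind in PRECEDENCE:
        if kind in found:
            return kind, found[kind]
    raise ValueError("no recognizable hash in submission")
```

The returned pair names the hash type and its normalized value, which can then be used to look up the Known MD5, Known SHA1, and Known SHA256 values.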

Armed with these updates, we can start to provide you with the totality of what we know about an Indicator and any of its aliases. Who cares if you call it by its SHA1 name or SHA256—the file is the file. If you can match those names together appropriately, it stands to reason that you can (and should) match any of their corresponding intel and enrichments.

Additional File Information

Part of the problem with fragmented datasets is that you don’t necessarily know what you have. Your industry partners may have given you some malware designations for the MD5s in which they traffic. Your open-source feeds may tell you something else about that same file by its SHA1 name. So how are you to simply ask, on the scale of millions of Indicators, the obvious question: “What are all the things I know about this file and any of its nicknames?”
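One way to picture the answer is a lookup that gathers intel across every known alias of a file. In this sketch, `intel_by_hash` is a hypothetical in-memory mapping from a lowercase hash to a set of labels; it stands in for whatever stores your feeds and partners populate and is not a CAL or ThreatConnect API.

```python
from typing import Dict, Iterable, Optional, Set


def intel_for_file(
    aliases: Iterable[Optional[str]],
    intel_by_hash: Dict[str, Set[str]],
) -> Set[str]:
    """Union everything known about a file under any of its hashes."""
    merged: Set[str] = set()
    for file_hash in aliases:
        if file_hash is not None:
            merged |= intel_by_hash.get(file_hash.lower(), set())
    return merged
```

If a partner labeled the MD5 and a feed labeled the SHA1 of the same file, passing both aliases returns the combined set of labels in a single call.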

When viewing the legacy Details screen for File Indicators in ThreatConnect (Figure 3), you’ll see more enrichments based on the chosen hash from your query. Note the addition of new Classifiers—at a glance, you or your Playbooks can determine that the file you’re looking at is a Windows 32-bit binary (FileType.Win32) and that it’s specifically a spyware subset of the malware designator (Malware.Spyware). You’ll also start to see the outputs of our new analytics with fields like the CAL Proprietary Malware Family field shown in Figure 3.

With all of CAL’s analytical horsepower aligned, we’ve massively stepped up our offering on files. Without knowing anything about a file except for its hash, CAL can immediately deliver improved fidelity for metadata, reputation, and classification.

In Conclusion

CAL has come a long way in making sure that we are answering questions our users have about intelligence, sometimes before they even know to ask about it. By combining our unique data set and domain expertise, we’re starting to discover novel Indicators at a high rate and a high confidence level. By leveraging CAL, you can, too.

Keep in mind that these CAL insights aren’t just available to your human analysts, but also to your Playbooks! Stay tuned as we continue to showcase ways that CAL can drive your orchestration processes automatically, using its reputation and classification analytics to help you move faster and smarter.

ThreatConnect® is a registered trademark, and CAL™ is a trademark, of ThreatConnect, Inc.

G Suite™ and Google™ are trademarks of Google LLC.

20083-01 v.04.B